
Can artificial intelligence prevent suicide? How real-time monitoring could be the next big step in mental health care

Suicide is one of the most complex and painful challenges in public health. One of the main difficulties in preventing it is knowing when someone is struggling.

Suicidal thoughts and behavior can come and go quickly, and are not always present when someone sees a doctor or therapist, which makes them hard to detect in standard check-ups.

Today, many of us use digital devices to track our physical health: counting steps, monitoring sleep or checking screen time. Scientists are now starting to use similar tools to better understand mental health.

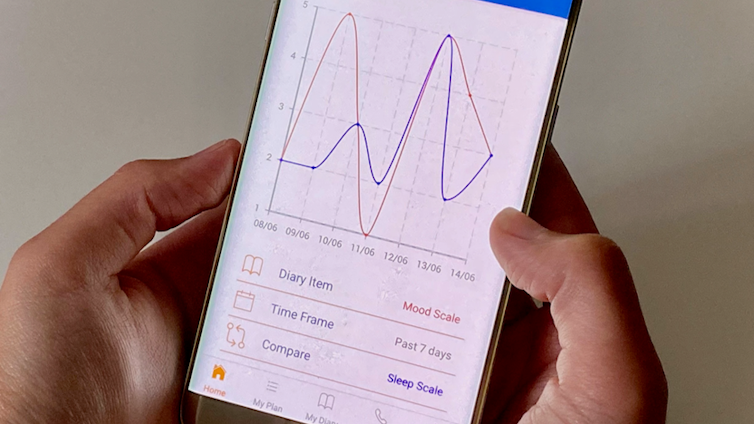

One method, called ecological momentary assessment (EMA), collects information about a person's mood, thoughts, behaviors and surroundings using a smartphone or wearable device. It does this either by prompting the person to enter information (active EMA) or by collecting it automatically using sensors (passive EMA).


Studies have shown that EMA can be safe for monitoring suicide risk, which covers a range of experiences, from suicidal thoughts to suicide attempts and completed suicide.

Studies with adults show that this type of monitoring does not increase risk. Instead, it gives us a more detailed and personal view of what someone is going through, moment by moment. So how can this information actually help someone at risk?

Adaptive interventions

One exciting application is the creation of adaptive interventions: personalized, real-time responses delivered directly through a person's phone or device. For example, if someone's data shows signs of anxiety, their device could gently prompt them to follow a step in their personal safety plan, which they previously created with a mental health professional.

Safety plans are proven tools in suicide prevention, but they are most helpful when people can access and use them at the moment they are needed most. These digital interventions can offer the right support when it matters, in the person's own environment.

Important questions remain: what changes in a person's data should trigger an alert? When is the best time to offer help? What form should that help take?

These are the kinds of questions that artificial intelligence (AI), and machine learning in particular, is helping us to answer.

Machine learning is already being used to build models that can predict suicide risk by noticing subtle changes in a person's feelings, thoughts or behavior. It has also been used to predict suicide indicators in larger populations.

These models have performed well on the data they were trained on. But concerns remain. Privacy is a major one, especially when social media or personal data is involved.

There is also a lack of diversity in the data used to train these models, which means they may not work equally well for everyone. Applying models developed in one country or setting to another is difficult.

Despite this, research shows that machine learning models can predict suicide risk more accurately than the traditional tools used by clinicians. This is why mental health guidelines now recommend moving away from using simple risk scores to decide who receives care.

Instead, they suggest a more flexible, person-centered approach, built on open conversations and planning with the person at risk.

Ruth Melia, CC BY-SA

Predictions, accuracy and trust

In my research, I looked at how AI is being used with EMA in suicide research. Most studies involved people receiving care in hospitals or mental health clinics. In these settings, EMA was able to predict things such as suicidal thoughts after discharge.

While many of the studies we reviewed reported how accurate their models were, fewer looked at how often the models made mistakes, such as predicting that someone is at risk when they are not (false positives) or missing someone who is at risk (false negatives). To improve this, we developed a reporting guide to help make future research clearer and more complete.
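To see why accuracy alone can hide these mistakes, here is a minimal Python sketch. It is an illustration only, not the reporting guide or any study's actual method: the function name `summarize_errors`, the `ErrorReport` class and the ten made-up labels are all assumptions introduced for this example.

```python
from dataclasses import dataclass


@dataclass
class ErrorReport:
    """Counts of each outcome for a yes/no risk prediction (hypothetical helper)."""
    true_positives: int
    false_positives: int
    true_negatives: int
    false_negatives: int

    @property
    def accuracy(self) -> float:
        total = (self.true_positives + self.false_positives
                 + self.true_negatives + self.false_negatives)
        return (self.true_positives + self.true_negatives) / total if total else 0.0

    @property
    def false_positive_rate(self) -> float:
        # Share of people NOT at risk who were wrongly flagged.
        denom = self.false_positives + self.true_negatives
        return self.false_positives / denom if denom else 0.0

    @property
    def false_negative_rate(self) -> float:
        # Share of people at risk whom the model missed.
        denom = self.false_negatives + self.true_positives
        return self.false_negatives / denom if denom else 0.0


def summarize_errors(actual: list[int], predicted: list[int]) -> ErrorReport:
    """Compare true labels with predictions (1 = at risk, 0 = not at risk)."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    tp = fp = tn = fn = 0
    for truth, guess in zip(actual, predicted):
        if truth == 1 and guess == 1:
            tp += 1
        elif truth == 0 and guess == 1:
            fp += 1
        elif truth == 0 and guess == 0:
            tn += 1
        else:
            fn += 1
    return ErrorReport(tp, fp, tn, fn)


# Made-up example: ten people, two of whom are actually at risk.
actual = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
predicted = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
report = summarize_errors(actual, predicted)
print(report.accuracy, report.false_positive_rate, report.false_negative_rate)
```

In this invented example the model is right for eight people out of ten, yet it misses one of the two people who are at risk. A study that reported only the accuracy figure would not show that.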

Another promising area is the use of AI as a support tool for mental health professionals. By analyzing large sets of data from health services, AI could help predict how someone is doing and which treatments might work best for them.

But for this to work, professionals need to trust the technology. This is where explainable AI comes in: systems that not only give a result, but also explain how they arrived at it. This makes it easier for clinicians to understand and use AI insights, much as they use questionnaires and other tools today.

Suicide is a devastating global problem, but advances in AI and real-time monitoring offer new hope. These tools are not a cure-all, but they may help provide the right support at the right time, in ways we have never been able to before.