Why Google Trusts Big Brands (But Not You)

As the web inexorably fills with AI-driven junk, searchers and search engines are becoming increasingly skeptical of content, brands, and publishers.

Thanks to generative AI, it has never been easier to create, distribute, and retrieve information. But thanks to the recklessness of LLMs and of many publishers, it is rapidly becoming harder than ever to distinguish real, good information from regurgitated, bad information.

This one-two punch is changing the way Google and searchers filter information: both are choosing to distrust brands and publishers by default. We are moving from a world where trust had to be lost to a world where it has to be earned.

As SEO specialists and marketers, our most important task is to get off the “default blocklist” and onto the allowlist.

Given the huge amount of content on the internet — including so much redundant AI-generated content — it is too much of a burden for humans and search engines to assess the truth and credibility of information on a case-by-case basis.

We know that Google wants to filter out AI garbage.

Last year we saw five major updates, three dedicated spam updates, and a huge push for EEAT. As Google iterates on these updates, indexing of new sites has become incredibly slow (and, one might say, more selective), with more pages caught in “Crawled – currently not indexed” purgatory.

But that’s a tough problem to solve. AI content isn’t easy to spot. Some AI content is good and useful (just as some human content is bad and useless). Google wants to avoid watering down its index with billions of pages of bad or repetitive content, but that bad content is looking more and more like good content.

This problem is so tough that Google has hedged its bets. Instead of evaluating the quality of each article, Google has seemingly cut the Gordian knot by opting instead to elevate huge, trusted brands like Forbes, WebMD, TechRadar, or BBC to many more positions in the SERPs.

After all, it’s much easier for Google to police a handful of huge content brands than many thousands of smaller ones. By promoting “trusted” brands—brands with some history and public accountability—to dominant positions in popular SERPs, Google can effectively immunize many search experiences from AI risk.

(Thus making the “Forbes sloppiness” problem worse, but Google clearly sees this as the lesser evil.)

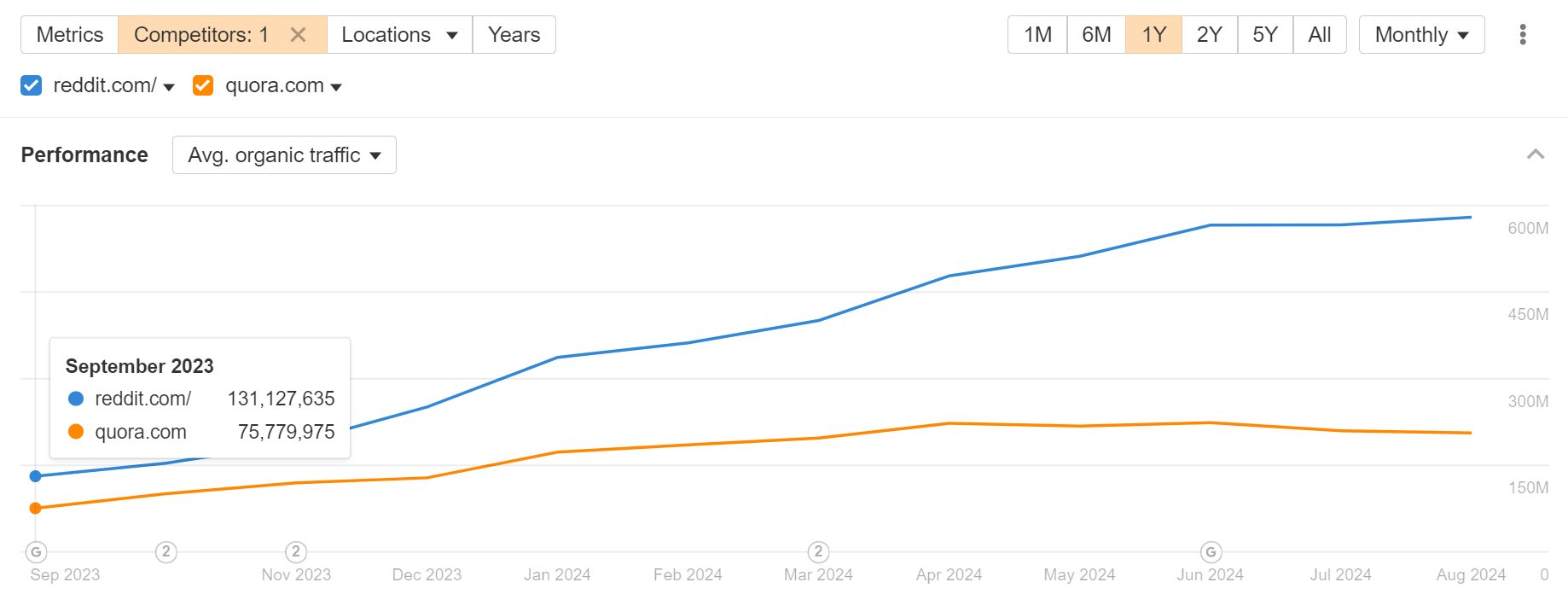

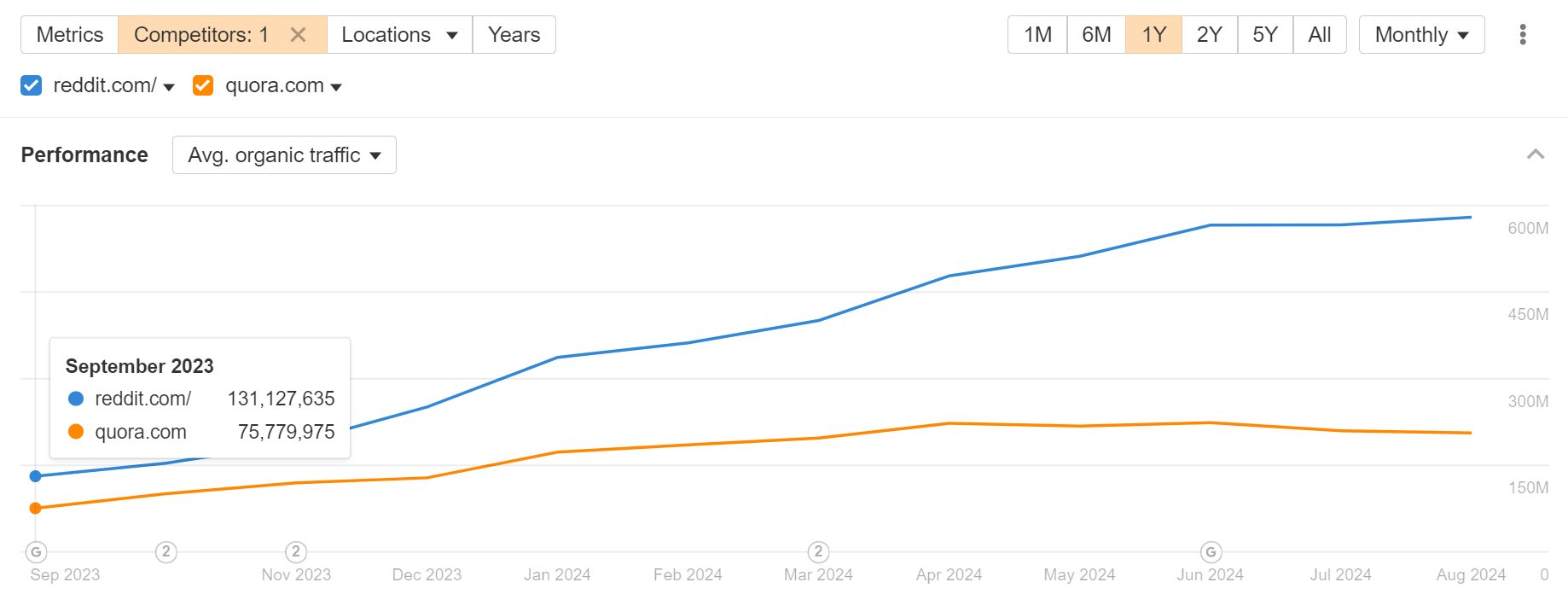

Similarly, UGC sites like Reddit and Quora have their own built-in quality control mechanisms — upvotes and downvotes — that allow Google to outsource the moderation burden:

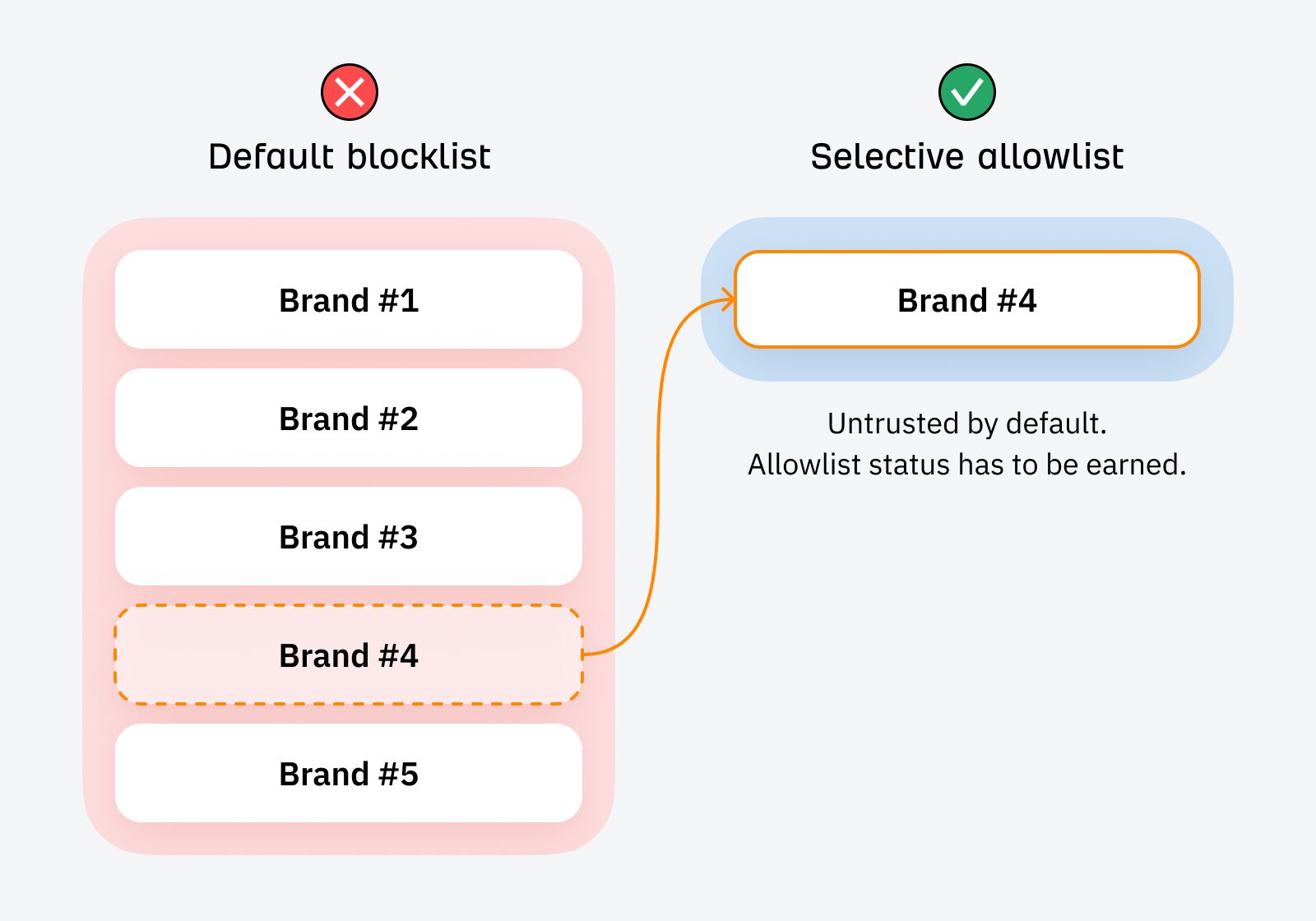

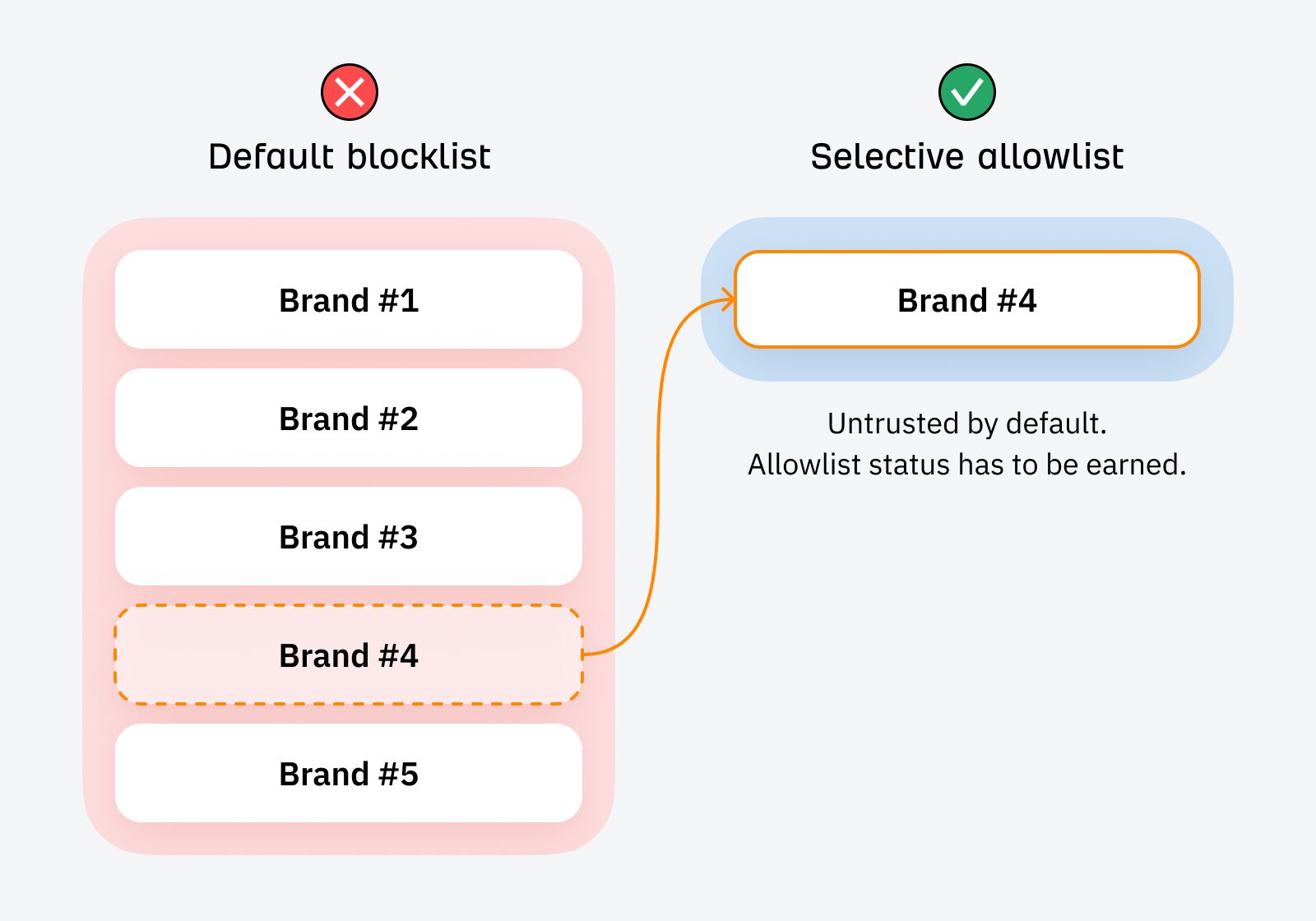

In response to the staggering amount of content being created, Google appears to be adopting a “default blocklist” approach: distrusting new information by default while giving preference to a handful of trusted brands and publishers.

New, smaller publishers are blocklisted by default; companies like Forbes, TechRadar, Reddit, and Quora have been elevated to allowlist status.

Hitting the “boost” button for huge brands may be a short-lived measure by Google until the company refines its algorithms, but I still think it reflects a broader change.

As Bernard Huang from Clearscope said in a webinar we hosted together:

“I think in the age of the internet and endless content, we’re moving towards a society where a lot of people will default to blocking everything, and I’ll opt to whitelist, you know, the Superpath community or Ryan Law on Twitter… They’re turning to communities and influencers as a way to continue to get content that they consider high-signal or trustworthy.”

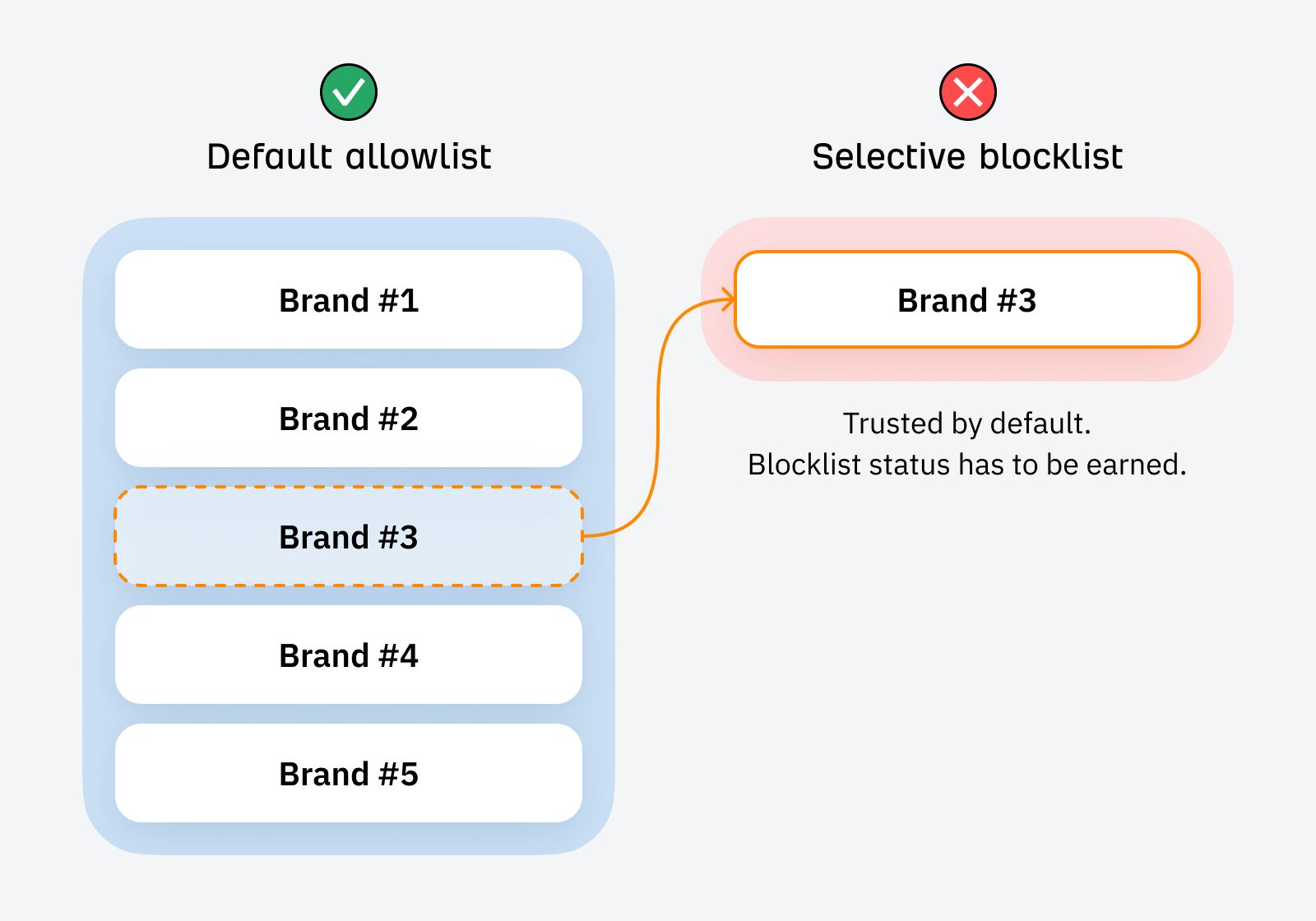

In the pre-AI era, brands were trusted by default. They had to actively violate that trust to be blocklisted (by publishing something untrustworthy or making an obvious factual error).

But today, with most brands racing to produce AI junk, it’s safest to assume that every new brand we come across is guilty of the same sin, until proven otherwise.

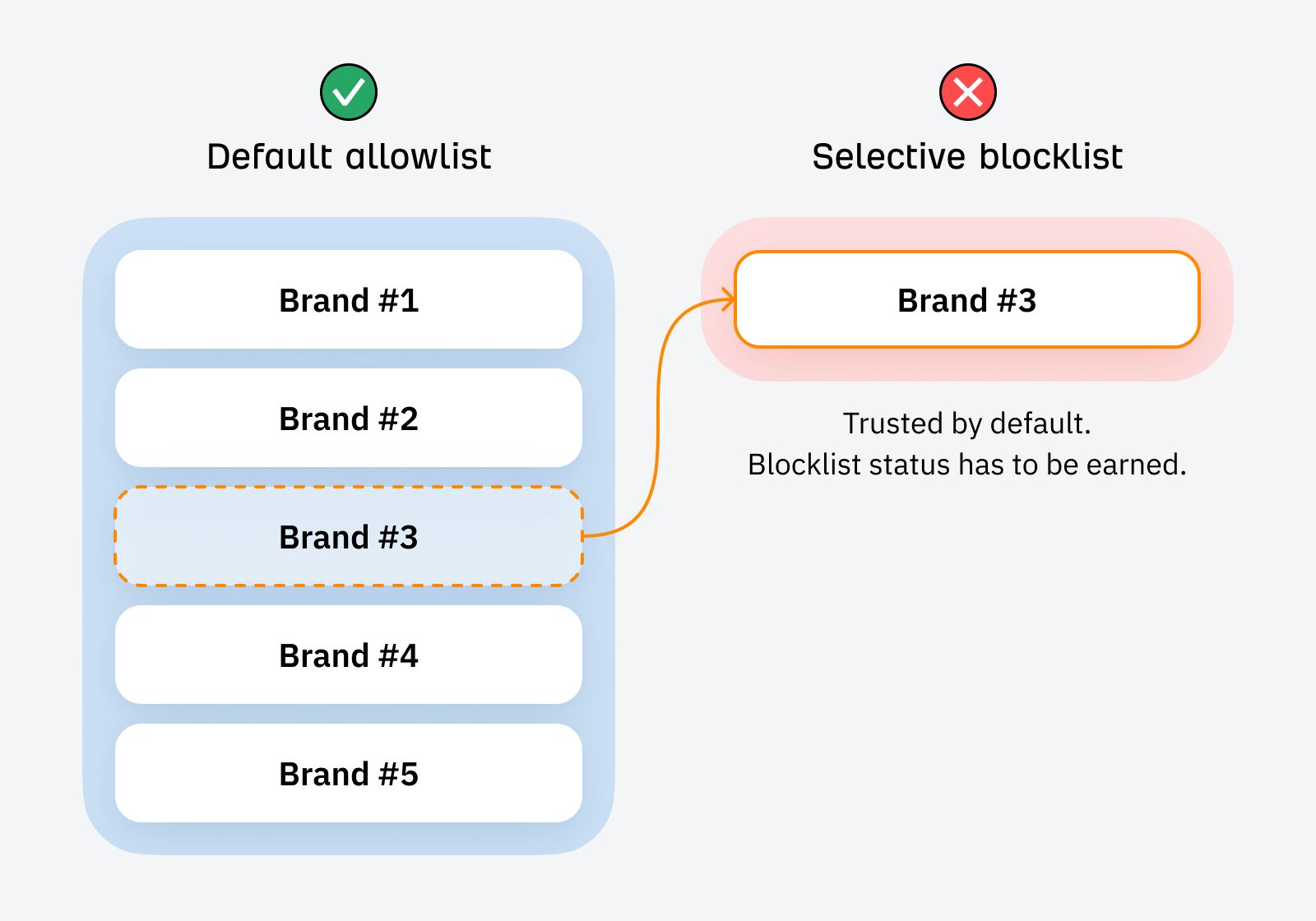

In the age of information overload, new content and brands will end up on the default blocklist, and allowlist status has to be earned.

In the AI era, Google is turning to gatekeepers: trusted entities that can vouch for the credibility and authenticity of content. Faced with the same problem, individual searchers will do the same.

Our task is to become one of these trusted gatekeepers of information.

Smaller, newer brands start out with a trust deficit.

In fact, the pre-AI marketing playbook of simply publishing useful content is no longer enough to climb out of the trust deficit and move from the blocklist to the allowlist. The game has changed. The marketing strategies that allowed Forbes et al. to build their brand moats will not work for today’s companies.

New brands need to go beyond routine information sharing and pair it with a clear demonstration of credibility.

They need to make it very clear that they put effort and thought into creating the content; show that they care about the end result of what they are posting (and that they are willing to accept any consequences that may result); and clearly communicate their motivations for creating the content.

Meaning:

- Be careful what you post. Don’t be a jack-of-all-trades; focus on topics where you have credibility. Measure yourself as much by what you do NOT publish as by what you do.

- Create content that aligns with your business model. Subdirectories with coupon codes and affiliate spam do not help you gain the trust of skeptical users (or Google).

- Avoid “content sites.” Many of the sites most affected by HCU are “content sites” that exist solely for the purpose of monetizing site traffic. Content will be more credible when it supports a real, actual product.

- Express your motivations clearly and transparently. Clearly demonstrate who you are, why (and how) you created your content, and what benefits you gain from it.

- Add something unique and original to everything you post. It doesn’t have to be complicated: run simple experiments, put in more effort than your competitors, and base everything on first-hand experience (I wrote about that in detail here).

- Ask real people to create content. Encourage them to showcase their achievements with photos, anecdotes, and author biographies.

- Build a personal brand. Turn your impersonal company brand into something associated with real, living people.

- Use Google’s gatekeepers to your advantage. If Google is telling you that it really trusts Reddit content… well… maybe you should try distributing your content and ideas via Reddit?

- Become a gatekeeper for your audience. What would it mean to become a trusted gatekeeper for your audience? Limit what you share, carefully curate third-party content, and be willing to vouch for everything you post.

Final thoughts

The default blocklist is not a literal blocklist, but it’s a useful mental model for understanding the impact of generative AI on search.

The internet has been poisoned by AI content; everything created from now on lives under a shadow of suspicion. So accept that you are starting from a place of suspicion. How can you earn the trust of Google and searchers?